Asymptotically uniform FAB p-values

Peter Hoff

2019-07-23

exampleLogistic.RmdSummary

This is a simulation study in which adaptive FAB \(p\)-values are constructed for logistic regression coefficients. This document serves as the replication code for the example in Section 4.3 of the article “Smaller \(p\)-values via indirect information” (Hoff 2019).

Linking model

The linking model we consider here is that the logistic regression coefficients \(\theta_1,\ldots, \theta_p\) are i.i.d. from a mixture of a point-mass distribution at zero and a normal distribution with some mean and variance.

Here is a function that estimates the parameters in the linking model. More generally, it obtains MLEs of \(\mu\) and \(\tau^2\) under the model \[ \begin{align*} y & \sim N_p( \theta , \sigma^2 I) \\ \theta_1,\ldots, \theta_p &\sim i.i.d\ (1-\pi) \delta_0(\theta) + \text{dnorm}(\theta, \mu,\tau) \end{align*} \]

fitMM<-function(y,s2){

obj<-function(gam){

pi<-gam[1] ; mu<-gam[2] ; t2<-gam[3]

-sum( log( pi*dnorm(y,mu,sqrt(t2+s2)) + (1-pi)*dnorm(y,0,sqrt(s2))) )

}

mut2<-optim( c(.9,mean(y),var(y)),obj,method="L-BFGS-B",

lower=c(.001,-Inf,.001),upper=c(.999,Inf,Inf))$par[2:3]

names(mut2)<-c("mu","t2")

mut2

}For this example, for each \(\theta_j\) we will plug-in an estimate \(\hat\theta_{-j}\) of the vector \(\theta_{-j}\) using data that is independent (asymptotically) of \(\hat\theta_j\).

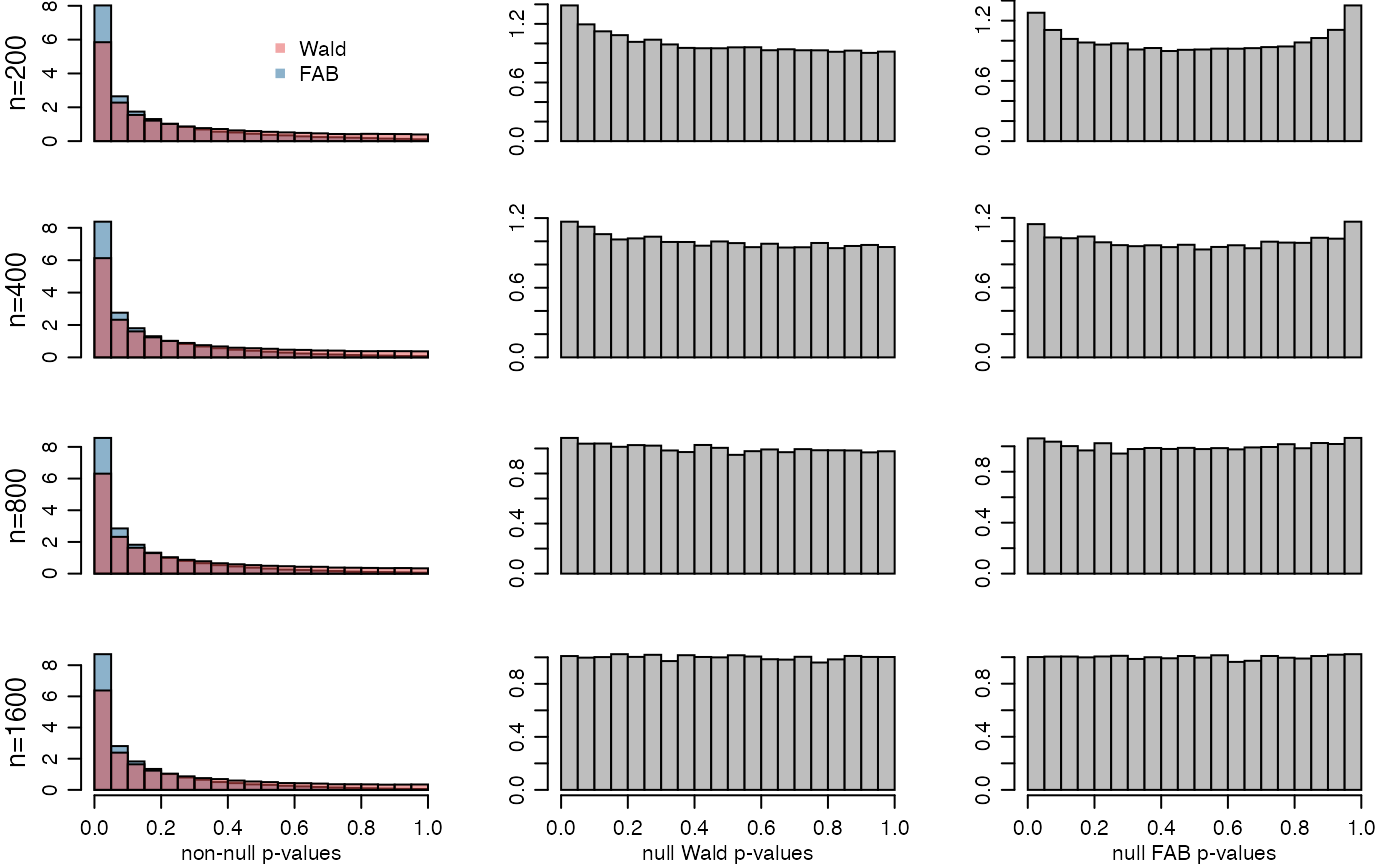

Here is the simulation:

set.seed(1)

p<-30

theta0<-rep(c(3,0),times=c(p/2,p/2))

nvals<-c(200,400,800,1600)

nsim<-5000

histW0<-histF0<-histW1<-histF1<-list()

sigTN<-sigNN<-NULL

par(mfrow=c(4,3),mar=c(3,3,1,1),mgp=c(1.75,.75,0))

for(k in 1:length(nvals)){

n<-nvals[k]

PF<-PW<-NULL

for(s in 1:nsim){

X<-matrix(rnorm(n*p),n,p)

lp<-X%*%theta0/sqrt(n)

y<-rbinom(n,1,1/(1+exp(-lp)))

fit<-glm(y~-1+X,family="binomial")

theta<-fit$coef

Sigma<-summary(fit)$cov.scaled

s2<-diag(Sigma)

MUT2<-NULL

for(j in 1:p){

G<-MASS::Null(Sigma[,j])

MUT2<-rbind(MUT2,fitMM( (G%*%(t(G)%*%theta))[-j],s2[-j]) )

}

z<-theta/sqrt(s2)

pW<-1-abs( pnorm(z)-pnorm(-z))

pF<-1-abs( pnorm(z+2*sqrt(s2)*MUT2[,1]/MUT2[,2]) - pnorm(-z) )

PF<-rbind(PF,pF) ; PW<-rbind(PW,pW)

}

histW0[[k]]<-hist(PW[,theta0==0],plot=FALSE)

histW1[[k]]<-hist(PW[,theta0!=0],plot=FALSE)

histF0[[k]]<-hist(PF[,theta0==0],plot=FALSE)

histF1[[k]]<-hist(PF[,theta0!=0],plot=FALSE)

hist(PF[,theta0!=0],col=rgb(.3,.3,.3,.5),prob=TRUE)

hist(PW[,theta0!=0],col=rgb(.8,.2,.5,.5),add=TRUE,prob=TRUE)

hist(PF[,theta0==0])

hist(PW[,theta0==0])

pW<-mean(PW[,theta0==0]<.05) ; pF<-mean(PF[,theta0==0]<.05)

sigTN<-cbind(sigTN,c(pW,pF))

pW<-mean(PW[,theta0!=0]<.05) ; pF<-mean(PF[,theta0!=0]<.05)

sigNN<-cbind(sigNN,c(pW,pF))

}

Here we save the results:

colnames(sigTN)<-colnames(sigNN)<-nvals

rownames(sigTN)<-rownames(sigNN)<-c("pW","pF")

names(histW0)<-names(histW1)<-nvals

names(histF0)<-names(histF1)<-nvals

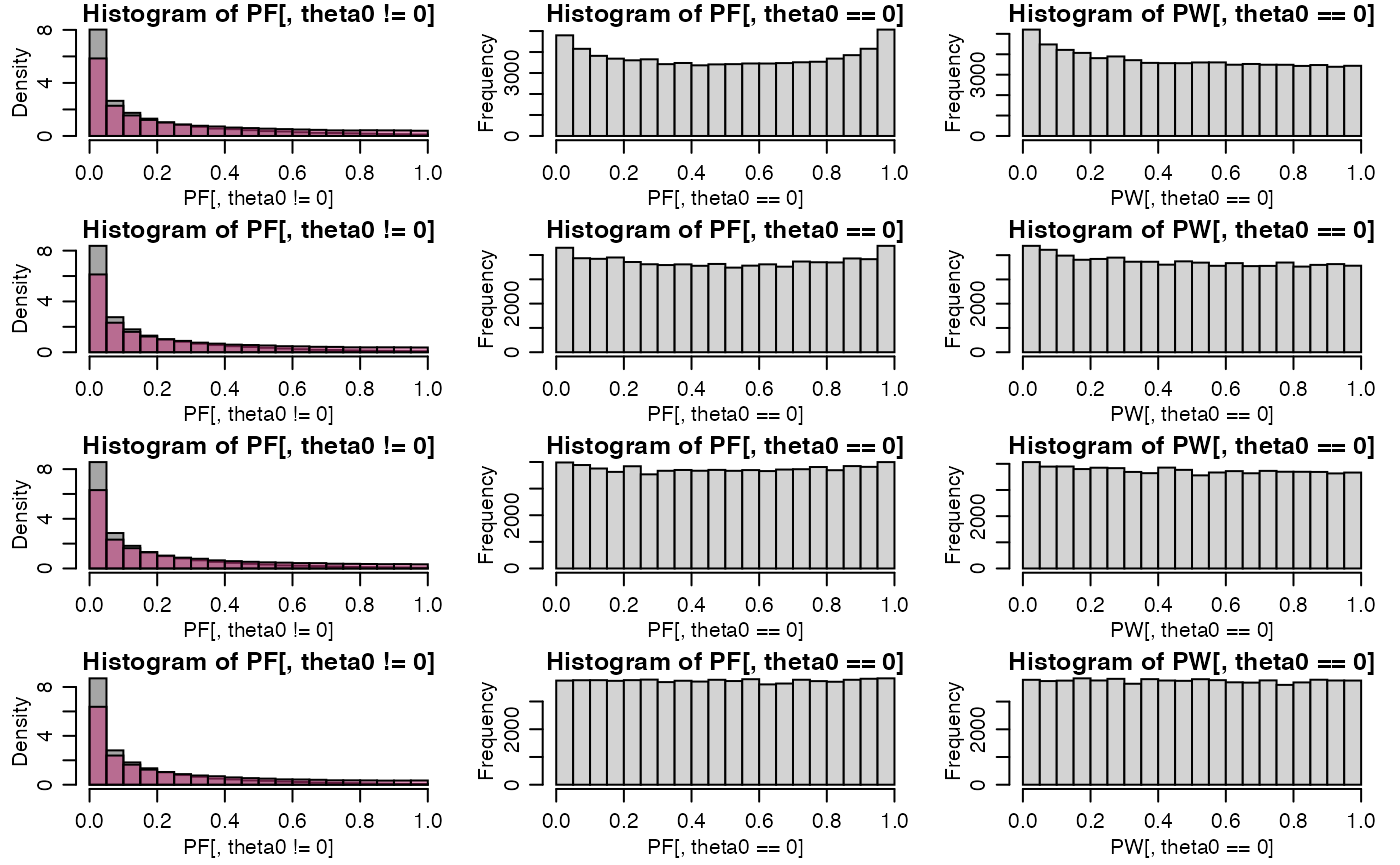

save(sigTN,sigNN,histW0,histW1,histF0,histF1,file="resultsLogistic.RData") Here are the fractions of true-null \(p\)-values below 0.05:

sigTN## 200 400 800 1600

## pW 0.06944000 0.05845333 0.05421333 0.05048

## pF 0.06394667 0.05730667 0.05309333 0.05004Here are the fractions of non-null \(p\)-values below 0.05:

sigNN## 200 400 800 1600

## pW 0.2923733 0.3062933 0.3154933 0.3189067

## pF 0.4010933 0.4189067 0.4282800 0.4353733Here are the plots from the article:

par(mfrow=c(4,3),mar=c(2.75,3.2,0,1),mgp=c(1.75,.75,0))

for(k in 1:4){

xlab<-1*(k==4) + 1

plot(histF1[[k]],col=rgb(.1,.4,.6,.5),main="",freq=FALSE,

xlab=c("","non-null p-values")[xlab],ylab="",xaxt=c("n","s")[xlab] )

plot(histW1[[k]],col=rgb(.9,.3,.3,.5),add=TRUE,freq=FALSE)

mtext(paste0("n=",names(histF1)[k]),2,line=2,cex=.85)

if(k==1){

legend(.5,7,legend=c("Wald","FAB"),pch=15,col=c(rgb(.9,.3,.3,.5),rgb(.1,.4,.6,.5)),bty="n")

}

plot(histW0[[k]],col="gray",main="",freq=FALSE,

xlab=c("","null Wald p-values")[xlab],ylab="",xaxt=c("n","s")[xlab])

plot(histF0[[k]],col="gray",main="",freq=FALSE,

xlab=c("","null FAB p-values")[xlab],ylab="",xaxt=c("n","s")[xlab])

}